From robotic vacuum cleaners in our homes to automated floor scrubbers in malls and self-driving taxis, automation will change our experience of daily life in the very near future. That said, successfully deploying high-functioning and reliable autonomous machines is hard. One particular challenge is Simultaneous Localization and Mapping (SLAM), a prerequisite for any autonomous navigation stack.

SLAM, a prerequisite for any autonomous navigation stack

While there have been significant improvements, many SLAM implementations remain slow and computationally expensive. Take the spatial calibration of a household robotic vacuum cleaner, for example, which can be performed automatically or manually. In automatic mode, the robot runs an exploration algorithm while performing SLAM, an approach that is both constrained and inefficient. In manual mode, an operator pilots the robot around the operating environment.

dConstruct makes data capture easy using 3D digital lidar

Implementing a robust SLAM solution begins with a high-resolution, accurate point cloud. dConstruct, a Singapore-based company delivering customizable autonomous navigation solutions, has simplified this process, making robots easier to use for its customers across the construction, manufacturing, oil & gas, and smart building industries.

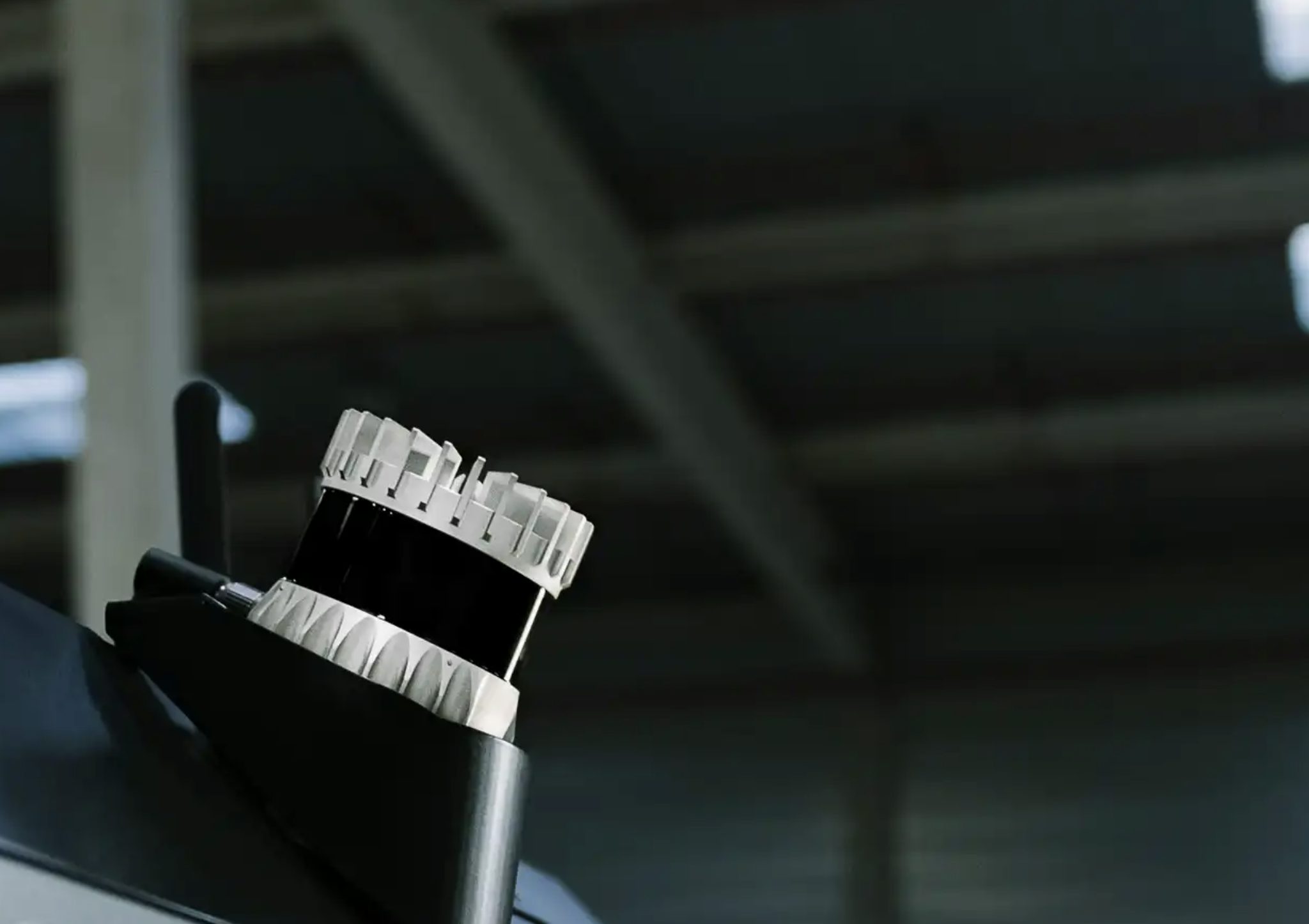

dConstruct’s dASHPack, a lightweight, portable backpack for mapping any environment, uses Ouster digital lidar to produce highly accurate SLAM maps and point clouds. These maps are then uploaded to dConstruct’s cloud-based Fleet Management Service, where robots running dConstruct’s autonomous software stack can easily access them.

The system is controlled by a low-power computer inside the backpack. A retractable pole carries an Ouster OS1-32 sensor, an IMU, and four RGB cameras for coloring the data. The OS1, chosen for its balance of cost and data density, measures distance with centimeter-level accuracy and operates in all lighting conditions. This enables HD mapping both indoors and outdoors, producing point cloud maps of environments larger than 500 m² in 20 minutes or less.
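Each lidar return is a range measured along a known beam direction. The sketch below shows, under simplifying assumptions, how such ranges become 3D points: it uses an idealized spherical model in which each beam has an elevation (altitude) angle and an azimuth angle, and it ignores the beam-origin offsets and per-sensor calibration that a real sensor such as the OS1 applies. The function `ranges_to_points` and its inputs are hypothetical and are not part of the Ouster SDK.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def ranges_to_points(
    ranges_m: Sequence[float],
    altitudes_deg: Sequence[float],
    azimuths_deg: Sequence[float],
) -> List[Point]:
    """Convert range returns to Cartesian points (hypothetical helper).

    Assumes an idealized spherical sensor model: every beam originates at
    the sensor center, altitude is measured up from the horizontal plane,
    and azimuth is measured counter-clockwise from the +x axis.
    """
    points: List[Point] = []
    for r, alt_deg, az_deg in zip(ranges_m, altitudes_deg, azimuths_deg):
        if r <= 0.0:
            # A zero range is treated here as "no return" and skipped.
            continue
        alt = math.radians(alt_deg)
        az = math.radians(az_deg)
        horizontal = r * math.cos(alt)  # projection onto the x-y plane
        points.append((horizontal * math.cos(az),
                       horizontal * math.sin(az),
                       r * math.sin(alt)))
    return points


if __name__ == "__main__":
    # Three returns: straight ahead, 90 degrees to the left, and a dropout.
    pts = ranges_to_points([10.0, 5.0, 0.0], [0.0, 0.0, 0.0], [0.0, 90.0, 0.0])
    for p in pts:
        print(tuple(round(c, 3) for c in p))
```

A real driver would look up each beam's altitude and azimuth from the sensor's calibration data rather than pass them in by hand.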

Internal software called dASHXplorer performs SLAM on the host computer. Using algorithmic and multi-core optimizations, the software runs SLAM faster than real time, so a live point cloud can be inspected visually while mapping is still in progress. At the core of the dASHXplorer SLAM algorithm is an incremental factor graph optimizer that exploits sparse matrices for high performance and reduced memory consumption. Several pre-processing steps run before the core algorithm, as illustrated in the picture below.
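dASHXplorer's optimizer is not published here, so the following is only a toy illustration of the underlying idea: a pose graph whose normal equations H x = b are stored sparsely, so that adding a factor touches only a few matrix entries, and whose solution is refined incrementally from the previous estimate. To stay short and dependency-free, it assumes 1D poses (positions on a line, which makes the problem linear) and uses warm-started Gauss-Seidel sweeps instead of the incremental sparse factorization that production solvers such as iSAM2 rely on. The class `SparsePoseGraph1D` and its methods are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Dict


class SparsePoseGraph1D:
    """Toy 1D pose graph (hypothetical): poses are scalar positions x_i.

    Factors are linear, so least squares reduces to the normal equations
    H x = b. H is a sparse dict-of-dicts: only entries for pairs of poses
    that share a factor are ever stored.
    """

    def __init__(self) -> None:
        self.H: DefaultDict[int, DefaultDict[int, float]] = defaultdict(
            lambda: defaultdict(float))
        self.b: DefaultDict[int, float] = defaultdict(float)
        self.x: Dict[int, float] = {}  # current estimate, used as warm start

    def add_prior(self, i: int, value: float, weight: float = 1e6) -> None:
        # Residual x_i - value; a strong prior anchors the whole graph.
        self.H[i][i] += weight
        self.b[i] += weight * value
        self.x.setdefault(i, value)

    def add_between(self, i: int, j: int, z: float, weight: float = 1.0) -> None:
        # Residual (x_j - x_i) - z; its Jacobian row is -1 at i and +1 at j,
        # so adding w * J^T J updates just four entries of H.
        self.H[i][i] += weight
        self.H[j][j] += weight
        self.H[i][j] -= weight
        self.H[j][i] -= weight
        self.b[i] -= weight * z
        self.b[j] += weight * z
        # Initialize an unseen pose by chaining the measurement.
        if j not in self.x:
            self.x[j] = self.x.get(i, 0.0) + z
        self.x.setdefault(i, self.x[j] - z)

    def solve(self, max_sweeps: int = 200, tol: float = 1e-10) -> Dict[int, float]:
        # Gauss-Seidel sweeps over the sparse rows of H. Each solve starts
        # from the previous estimate, so a few new factors usually need
        # only a few sweeps.
        for _ in range(max_sweeps):
            largest_step = 0.0
            for i in sorted(self.H):
                row = self.H[i]
                off_diag = sum(v * self.x[k] for k, v in row.items() if k != i)
                updated = (self.b[i] - off_diag) / row[i]
                largest_step = max(largest_step, abs(updated - self.x[i]))
                self.x[i] = updated
            if largest_step < tol:
                break
        return dict(self.x)


if __name__ == "__main__":
    graph = SparsePoseGraph1D()
    graph.add_prior(0, 0.0)
    graph.add_between(0, 1, 1.0)  # odometry: 1.0 m forward
    graph.add_between(1, 2, 1.0)  # odometry: 1.0 m forward
    print(graph.solve())
    graph.add_between(0, 2, 1.8)  # loop closure disagrees with odometry
    print(graph.solve())          # re-solve, warm-started from the last estimate
```

In this usage, the loop closure says pose 2 is 1.8 m from pose 0 while odometry says 2.0 m; with equal weights, the least-squares solution spreads the 0.2 m disagreement, giving roughly x1 ≈ 0.933 and x2 ≈ 1.867. Because H only stores entries for poses linked by a factor, memory grows with the number of factors rather than with the square of the number of poses.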

When used together, dASHPack and dASHXplorer take just 30 minutes to produce a detailed map, greatly speeding up the deployment of autonomous robots in new environments.

Coloring point clouds for Digital Twin applications

Equipped with four RGB cameras, dASHPack can color the point cloud map generated by SLAM. Built for real-world scalability, dASHXplorer can render extremely large colored point clouds of up to 1 billion points for viewing.
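The section does not say how dASHPack assigns colors, but a common approach is to project each lidar point into a calibrated camera image and sample the pixel it lands on. The sketch below assumes a pinhole camera with no lens distortion, known lidar-to-camera extrinsics (rotation `R`, translation `t`), nearest-pixel sampling, and no occlusion handling. The function `colorize_points` and its parameters are hypothetical. With several cameras, one could run it once per camera and keep the first color found for each point.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
RGB = Tuple[int, int, int]
Matrix3 = Sequence[Sequence[float]]


def colorize_points(
    points: Sequence[Point],
    image: Sequence[Sequence[RGB]],
    R: Matrix3,
    t: Point,
    fx: float, fy: float, cx: float, cy: float,
) -> List[Optional[RGB]]:
    """Return one color per point, or None if the camera cannot see it.

    Assumed camera frame: x right, y down, z forward (optical axis).
    """
    height, width = len(image), len(image[0])
    colors: List[Optional[RGB]] = []
    for p in points:
        # Lidar frame -> camera frame: p_cam = R p + t
        xc, yc, zc = (sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
        if zc <= 0.0:
            colors.append(None)  # behind the camera
            continue
        # Pinhole projection to pixel coordinates, rounded to the nearest pixel.
        u = round(fx * xc / zc + cx)
        v = round(fy * yc / zc + cy)
        if 0 <= v < height and 0 <= u < width:
            colors.append(image[v][u])
        else:
            colors.append(None)  # projects outside the image
    return colors


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    img = [[(255, 0, 0), (0, 255, 0)],
           [(0, 0, 255), (255, 255, 255)]]
    pts = [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0), (0.0, 0.0, -1.0), (5.0, 0.0, 1.0)]
    print(colorize_points(pts, img, identity, (0.0, 0.0, 0.0), 1.0, 1.0, 0.0, 0.0))
```

A production pipeline would also correct lens distortion and check occlusion (for example with a depth buffer) so that points hidden behind nearer surfaces are not painted with the wrong color.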

Real-world deployments

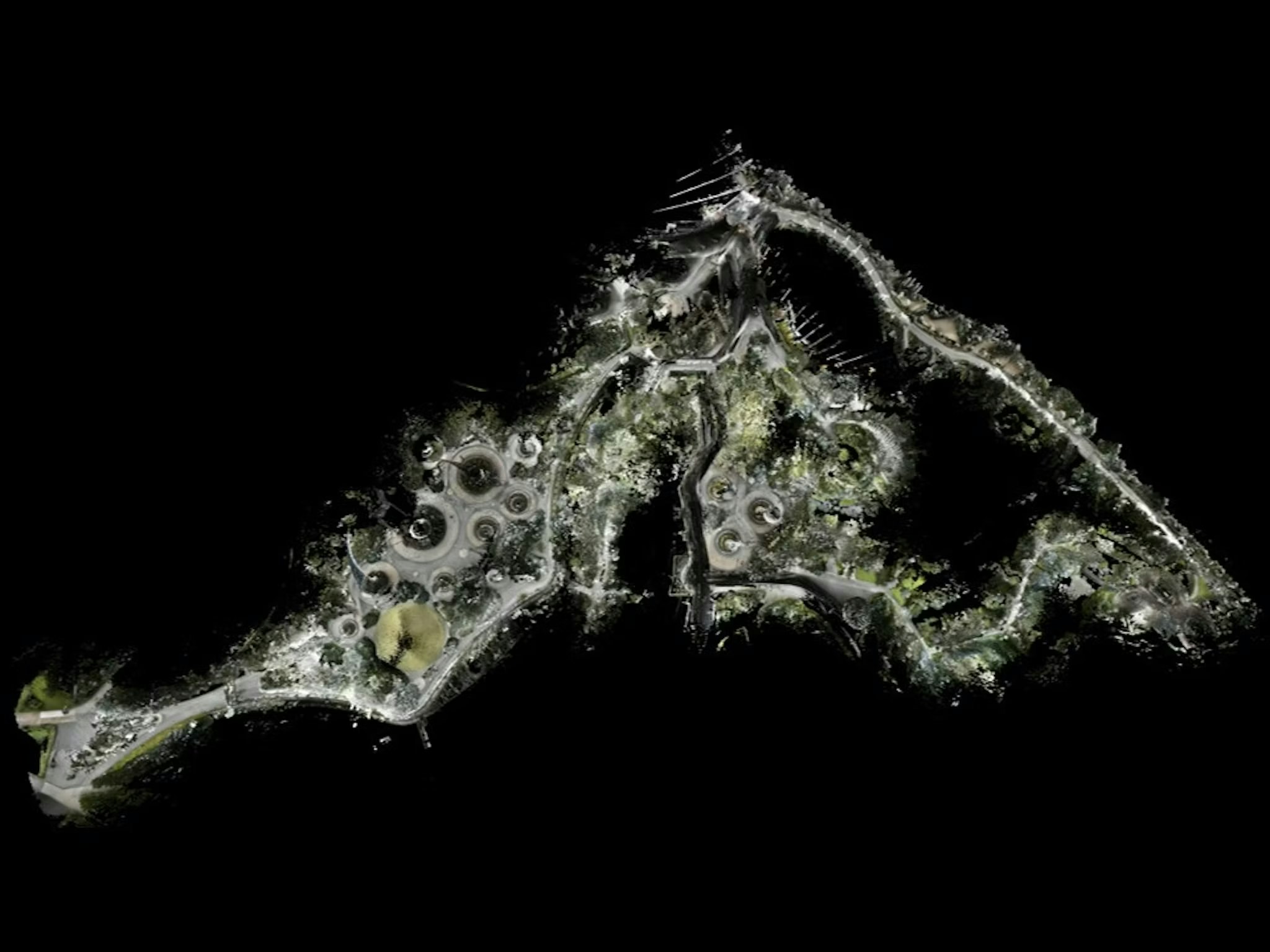

SLAM maps enable the deployment of autonomous robots as well as the inspection and reconstruction of working environments. The picture below shows a map of a smart office building, The Galen, in Singapore. The map is used to deploy autonomous robots ranging from cleaning robots to last-mile delivery robots.

In a separate use case, a map of a construction site on Sentosa, Singapore, was created and deployed to Spot, an autonomous inspection robot, for remote site inspection.

To learn more about SLAM, dConstruct, or autonomous robotic applications, reach out to our team.