Over the past 12 months, we’ve seen lots of discussion about how lidar doesn’t work in the rain. Mostly it’s been speculation without data, like these comments below:

This was us, reading these tweets:

Working closely with autonomous vehicles, we know that both lidar sensors and cameras play important roles in an autonomous stack. Cameras bring high resolution to the table, while lidar sensors bring depth information. That said, in situations where one of the two sensors may experience degraded performance, such as in the rain, the other sensor can pick up the slack for a perception system.

So back to these comments on Twitter. They raise the question: has anyone commenting on lidar performance in the rain actually seen lidar data from a drive in the rain? Our Google searches tell us the answer is almost definitely not, so we figured the best way to make our point would be with a real drive.

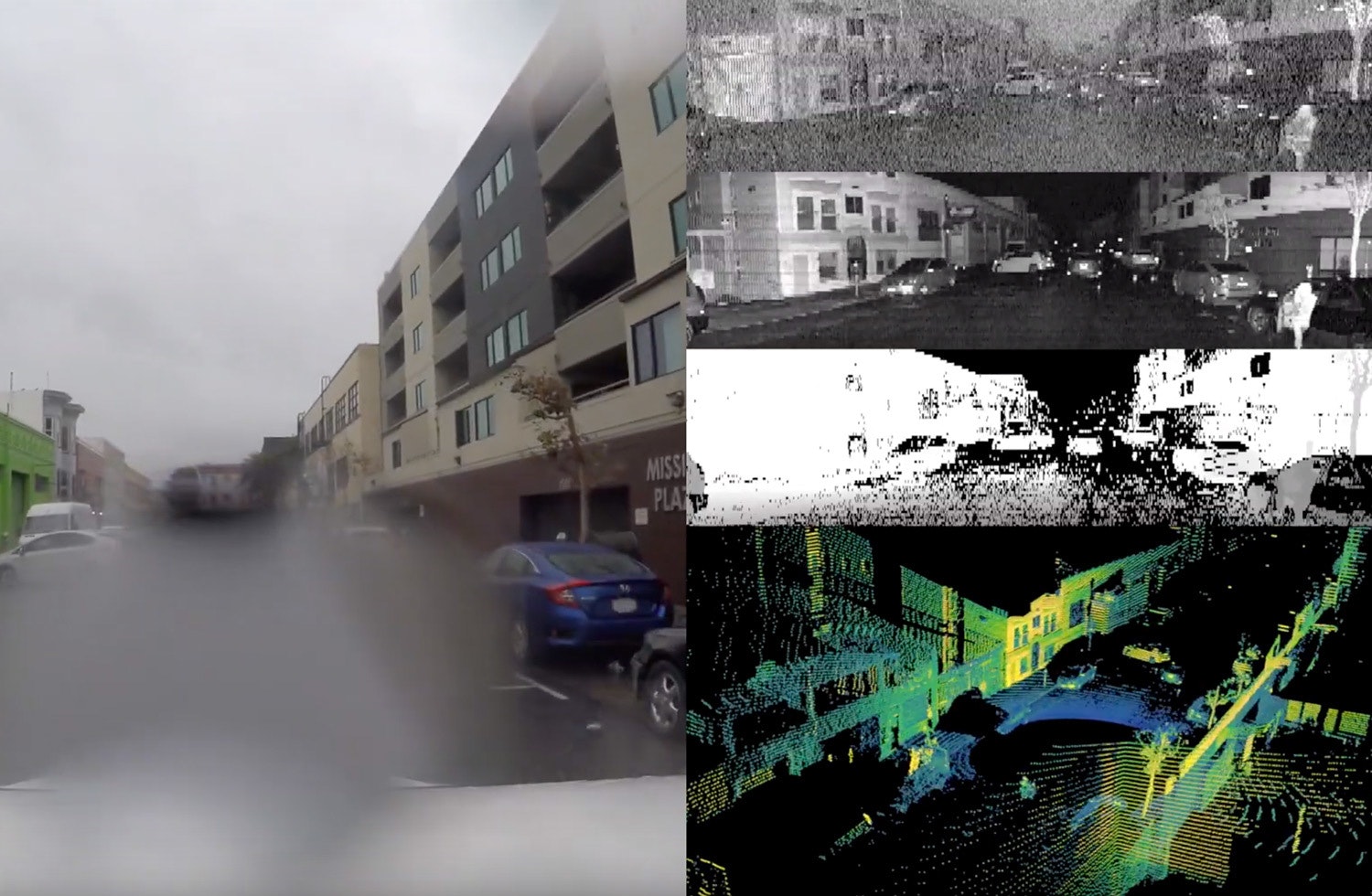

When we got the first rain in San Francisco last month, we recorded a side-by-side camera and lidar sensor drive to demonstrate the minimal impact that rain has on the lidar data, and to show that wet conditions are one of many scenarios where having both sensors makes a perception system safer and more robust.

For our demonstration, we placed a GoPro recording 4K video and an OS1 lidar sensor on the top of our car and drove out into moderate, steady rain.

Check out the footage from the drive:

And if you want to explore the raw data for yourself, here’s a 2-minute .bag file from the drive (warning before you click: it’s a 10 GB file).

Here’s also a sample configuration file for viewing the data with our visualizer.

The rain affects the camera more than the lidar sensor

In the first view in the video, the three images in the top right are the structured data panoramic images output by the lidar sensor — no camera involved. The top image displays ambient light (sunlight) captured by the lidar sensor. The second image displays the intensity signal (strength of laser light reflected back to the sensor), and the third image displays the range of objects calculated by the sensor.

You can see that water doesn’t obscure the lidar signal and range images, even though there are water droplets on the lidar sensor’s window. The ambient image is a bit grainy because the cloud cover reduces the amount of sunlight, but still shows no impact from the rain.

Larger optical aperture

One of the things that gives the OS1 the unique ability to see through obscurants (in this case water droplets) on the window is the large optical aperture of the sensor, enabled by our digital lidar technology. As we discussed in a prior post, the large aperture allows light to pass around obscurants on the sensor window. The result is that the range of the sensor is reduced slightly by the water, but the water does not distort the image at all.

The large aperture also allows the sensor to see around the falling raindrops in the air. The sensor picks up virtually no falling rain, despite the steady rainfall. This can be seen most clearly in the second half of the video.

The camera, on the other hand, has an aperture much smaller than the size of the raindrops. As a result, a single bead of water can obscure large areas in the field of view that may contain critical information. To combat this distortion, automakers have developed cleaning solutions to prevent water and dirt buildup on camera lenses.
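To make the aperture argument concrete, here is a minimal geometric sketch. It assumes a single circular droplet sitting directly in front of a circular aperture and blocking light in proportion to area, and it ignores refraction and diffraction. The droplet and aperture diameters are illustrative guesses, not measured specifications of the OS1 or of any camera.

```python
def blocked_fraction(droplet_diameter_mm: float, aperture_diameter_mm: float) -> float:
    """Fraction of a circular aperture's area covered by one circular droplet.

    Simplified model: area ratio only, capped at 1.0 when the droplet is
    at least as large as the aperture.
    """
    if droplet_diameter_mm <= 0 or aperture_diameter_mm <= 0:
        raise ValueError("diameters must be positive")
    ratio = (droplet_diameter_mm / aperture_diameter_mm) ** 2
    return min(1.0, ratio)


# Illustrative values only (assumptions, not sensor specifications).
DROPLET_MM = 2.0
LARGE_APERTURE_MM = 10.0  # hypothetical "large" aperture
SMALL_APERTURE_MM = 1.5   # hypothetical "small" aperture

large = blocked_fraction(DROPLET_MM, LARGE_APERTURE_MM)
small = blocked_fraction(DROPLET_MM, SMALL_APERTURE_MM)
print(f"large aperture blocked: {large:.0%}")
print(f"small aperture blocked: {small:.0%}")
```

Under these assumed numbers, the droplet covers only a small share of the large aperture, so most of the light still passes around it, while the same droplet covers the small aperture entirely.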

Shorter exposure time (faster shutter speed)

Exposure time is another important differentiator between lidar sensors and cameras that affects performance in rain. Both human eyes and cameras have long exposure times measured in thousandths of a second, which makes falling water drops appear as large streaks in an image and rain appear denser than it actually is. A lidar sensor has an ultra-fast exposure measured in millionths of a second, so rain is effectively frozen in place during a measurement and has a low likelihood of being detected as a streak across multiple pixels.
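A quick back-of-the-envelope calculation shows why exposure time matters. The sketch below assumes a raindrop falling at about 9 m/s (roughly the terminal speed of a large drop), a camera exposure of one thousandth of a second, and a lidar exposure of one millionth of a second. These are representative round numbers, not measured values from our drive.

```python
def streak_length_mm(fall_speed_m_s: float, exposure_s: float) -> float:
    """Distance a falling drop travels during one exposure, in millimetres."""
    return fall_speed_m_s * exposure_s * 1000.0


FALL_SPEED_M_S = 9.0      # assumed fall speed of a large raindrop
CAMERA_EXPOSURE_S = 1e-3  # assumed: one thousandth of a second
LIDAR_EXPOSURE_S = 1e-6   # assumed: one millionth of a second

camera_streak = streak_length_mm(FALL_SPEED_M_S, CAMERA_EXPOSURE_S)
lidar_streak = streak_length_mm(FALL_SPEED_M_S, LIDAR_EXPOSURE_S)
print(f"camera: drop moves {camera_streak:.3f} mm during the exposure")
print(f"lidar:  drop moves {lidar_streak:.3f} mm during the exposure")
```

With these assumptions the drop moves several millimetres during the camera exposure, more than its own diameter, but only a few thousandths of a millimetre during the lidar measurement.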

Digital return processing

Finally, the way our digital lidar sensors process returns gives them an advantage in seeing through obscurants, like rain, that sit at specific ranges. Our sensors are “range gated imagers”. Instead of integrating all light returning from the direction of a pixel like a camera, our sensors integrate photons into a series of “range gates” (in other words, we create a photon time series).

From that time series, we pick out only the strongest signal return for each pixel in the sensor. This allows us to ignore the signals that occur at other ranges, like reflections off of raindrops or even a wet sensor window.
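The idea of picking the strongest return out of a photon time series can be sketched in a few lines. This is a simplified illustration, not the sensor's actual firmware: the gate width, the photon counts, and the function names are all hypothetical, and real processing would also have to deal with noise and ambient light.

```python
from typing import Sequence

SPEED_OF_LIGHT_M_S = 299_792_458.0


def strongest_return_range_m(photon_counts: Sequence[int], gate_width_s: float) -> float:
    """Range (metres) at the centre of the gate holding the most photons."""
    if not photon_counts:
        raise ValueError("photon_counts must not be empty")
    # Index of the range gate with the strongest signal.
    peak_gate = max(range(len(photon_counts)), key=lambda i: photon_counts[i])
    # Round-trip time to the centre of that gate, then halve for one-way range.
    round_trip_s = (peak_gate + 0.5) * gate_width_s
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def integrated_pixel_value(photon_counts: Sequence[int]) -> int:
    """What a sensor with no range gating would report: all light summed."""
    return sum(photon_counts)


# Hypothetical time series for one pixel, using 1 ns gates (an assumption).
GATE_WIDTH_S = 1e-9
counts = [0] * 200
counts[1] = 3     # weak reflection off a wet sensor window
counts[40] = 5    # weak reflection off a raindrop
counts[133] = 60  # strong reflection off a building

print(f"strongest return at {strongest_return_range_m(counts, GATE_WIDTH_S):.2f} m")
print(f"integrated value with no gating: {integrated_pixel_value(counts)}")
```

In this made-up example the peak lands on the building at about 20 m, and the weaker window and raindrop returns are simply never selected. Summing every gate, by comparison, folds all three signals into one number.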

A camera, by contrast, has no ability to distinguish between the rain in the air and a hard object behind it. All the light is integrated into a single value for each pixel, with no concept of multiple signal returns. The OS1 is able to ignore the signal returning from a raindrop and pick out the stronger signal from, for example, a building. A camera, instead of ignoring the signal from a raindrop, combines it with the signal returning from the building behind it to create one combined return. These combined returns appear as distortions.

Due to the aperture size and the shutter speed, the lidar sensor experiences significantly less image distortion than the camera. That said, the lidar sensor wasn’t completely unaffected — in wet conditions, light reflects off the wet road, posing a challenge for both the camera and the lidar sensor.

Water turns the road into a specular surface

On a wet road, the water acts as a specular, mirror-like surface that reflects light in a very different pattern than in dry conditions. This creates challenges for both sensors: confusing reflections for the camera and reduced range for the lidar sensor. On a dry road, the rough asphalt diffuses light, scattering laser light in all directions. But on a wet road, the water turns the road into an imperfect specular surface that reflects a portion of the light like a mirror.

For a camera, the challenge comes from reflections that appear on the road that may create confusing mirror images of objects. In the image below, you can see the headlights of a car reflecting off the road surface. For a camera with object recognition software, this can potentially confuse the system into thinking that a second car may be present, or that the car may be closer than it actually is.

For the lidar sensor, the adverse impact was that the range of the sensor was reduced on the surface of the road. A portion of the laser light emitted by the lidar sensor reflects off the water on the road and away from the sensor. This means that the sensor is less able to see the road surface at long ranges. That said, the range of the sensor is unaffected on all other objects (cars, buildings, trees, etc.).
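The geometry behind this can be sketched with the standard mirror-reflection formula r = d - 2(d·n)n. The example assumes a perfectly flat, perfectly mirror-like road and a sensor mounted 2 m above it, which are simplifications chosen for illustration. A real wet road is only partially specular, so some light still scatters back.

```python
import math

Vector = tuple[float, float, float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def reflect(direction: Vector, normal: Vector) -> Vector:
    """Mirror-reflect a direction about a unit surface normal: r = d - 2(d.n)n."""
    scale = 2.0 * dot(direction, normal)
    return tuple(d - scale * n for d, n in zip(direction, normal))


def beam_to_road(sensor_height_m: float, ground_distance_m: float) -> Vector:
    """Unit vector from the sensor to a road point (x forward, z up)."""
    length = math.hypot(ground_distance_m, sensor_height_m)
    return (ground_distance_m / length, 0.0, -sensor_height_m / length)


ROAD_NORMAL: Vector = (0.0, 0.0, 1.0)  # flat road, an idealisation
SENSOR_HEIGHT_M = 2.0                  # hypothetical mounting height

incoming = beam_to_road(SENSOR_HEIGHT_M, ground_distance_m=30.0)
outgoing = reflect(incoming, ROAD_NORMAL)
back_to_sensor = tuple(-c for c in incoming)

# Cosine of the angle between the mirrored beam and the path back to the sensor.
alignment = dot(outgoing, back_to_sensor)
print(f"mirrored beam direction: {outgoing}")
print(f"alignment with return path (1.0 = straight back): {alignment:.3f}")
```

At a shallow angle like this one, the mirrored beam keeps travelling forward and slightly upward, so its alignment with the return path is strongly negative. That is the portion of laser light that never makes it back to the sensor.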

You can see this effect at play in the gif below that compares conditions on a rainy day vs. a dry day. The buildings show little change in visibility in the rain, but the range on the road surface is reduced in the rain due to the specular reflection of the road.

Not all lidar is created equal

The strong performance of Ouster sensors in the rain is not necessarily representative of all lidar sensors. Our sensors are engineered to withstand the abuse and unpredictable conditions that come with commercial deployments: rain storms, being dunked in water, car washes, power washers, and automotive-grade shock and vibration.

Of other commercially available lidar technology, both legacy analog spinning lidar sensors and MEMS lidar sensors tend to struggle more with rain due to their small apertures. Like the camera in our demonstration here, traditional sensors will have their output distorted by small droplets. Additionally, no legacy analog lidar or MEMS units are rated higher than IP67 (our sensors are all rated to IP68 and IP69K standards), nor have they passed standard automotive-grade shock and vibration testing, making them prone to failure when put on bumpy roads or in wet conditions.

Diversity is key

This rain demonstration highlights how different environmental conditions can have an asymmetrical impact on sensor performance. It is not meant to bash cameras or in any way imply that they are unnecessary. Cameras provide critical data, in combination with lidar and radar data, to power autonomous vehicle perception stacks.

The rain, though, shows situations where camera performance may be more degraded than lidar performance. In these types of situations, having a diverse set of sensors reduces the risk of error in perception performance. Diversity of sensors is critical for making autonomous vehicles safe and ubiquitous.

Look out for more footage of our sensors in tough conditions in the coming year.