100,000 Automated Guided Vehicles (AGVs) and Autonomous Mobile Robots (AMRs) were shipped globally in 2021 alone. In terms of revenue, AMRs generated $1.6 billion and AGVs grew to $1.3 billion(1). These markets are growing rapidly, with AGVs and AMRs together projected to grow to $18B by 2027(2). Powering this growth is a field of robotics software that has kept advancing for decades but is still foreign to many: Simultaneous Localization and Mapping (SLAM).

One might think that SLAM is only for advanced industrial autonomous robots, but it’s even more ubiquitous than most imagine. For example, many households now use an autonomous vacuum cleaner that has a built-in lidar sensor and uses SLAM to clean floors. There are also last-mile delivery robots and warehouse robots that utilize SLAM to adapt quickly to the changing operating environment.

SLAM, in the simplest terms, is a method to create a map of the environment while tracking the position of the map creator. This mapping and positioning method is the key piece in enabling robots to autonomously know their current location in space and navigate to a new location.

As with many technologies like Artificial Intelligence, SLAM benefits from new advancements, such as lidar and edge computing, to continuously evolve and push boundaries. SLAM has many variants, each with advantages and shortfalls depending on the use case. In this blog post, we’ll walk through SLAM in simpler terms to help you understand the technology and make better decisions in adopting autonomous technology.

What is SLAM

Imagine yourself waking up in a foreign place. What would you do? You would probably start to look around to locate where you are. Along the way, you would mark some objects to help you remember where you are going. You would be making a mental map, drawing how the world looks around you and where you are in that world. This is what Simultaneous Localization and Mapping (SLAM) does. SLAM is an essential piece in robotics that helps robots to estimate their pose – the position and orientation – on the map while creating the map of the environment to carry out autonomous activities. Let’s break it down and go over what localization and mapping each mean.

Localization

When an autonomous robot is turned on, the first thing it does is identify where it is. In a typical localization scenario, a robot is equipped with sensors to scan its surroundings and monitor its movements. Using the inputs from the sensors, the robot is able to identify where it is on a given map. In some cases, a tracking device like a GPS is used to assist the localization process (this is how Google can give you the blue dot on Google Maps, for example).
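As a minimal sketch of what "monitoring its movements" can mean, the snippet below tracks a 2D pose (x, y, heading) by dead reckoning from odometry. The `Pose` class and `apply_odometry` function are hypothetical names chosen for illustration, not part of any particular SLAM library. A real system would also correct this estimate against sensor scans, because odometry alone drifts over time.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # metres
    y: float      # metres
    theta: float  # heading in radians, 0 = facing along +x


def apply_odometry(pose: Pose, distance: float, turn: float) -> Pose:
    """Turn in place by `turn` radians, then drive `distance` metres forward."""
    theta = (pose.theta + turn) % (2 * math.pi)
    return Pose(
        x=pose.x + distance * math.cos(theta),
        y=pose.y + distance * math.sin(theta),
        theta=theta,
    )


pose = Pose(0.0, 0.0, 0.0)
pose = apply_odometry(pose, 1.0, 0.0)          # drive 1 m along +x
pose = apply_odometry(pose, 2.0, math.pi / 2)  # turn left 90 degrees, drive 2 m
print(pose)
```

After the two moves the robot should be roughly 1 m along x and 2 m along y from where it started, facing along +y.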

Mapping

While it sounds simple, creating a map is not an easy task, especially for a robot. To get started, a type of visual detector, like a camera or a lidar sensor, is used to record the surroundings. As the robot moves, it captures more visual information and tries to make connections and extract features, like a corner, to mark identifiable points. However, some features could look very similar, making it difficult for a robot to tell them apart. This is when localization becomes useful for creating more accurate maps via SLAM.
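One common way to store such a map is a grid of cells that are either free or occupied. The sketch below is an assumption-laden illustration rather than a production mapper: `mark_hits` is a hypothetical helper, the robot pose is taken as known, and each range reading is simply turned into one occupied cell.

```python
import math


def mark_hits(grid, pose, ranges, cell_size=1.0):
    """Mark the grid cell where each range reading ends as occupied (1).

    grid: list of rows, indexed grid[row][col]
    pose: (x, y, heading in radians) of the robot
    ranges: list of (bearing relative to heading in radians, distance)
    """
    x, y, theta = pose
    for bearing, dist in ranges:
        hit_x = x + dist * math.cos(theta + bearing)
        hit_y = y + dist * math.sin(theta + bearing)
        col = int(hit_x // cell_size)
        row = int(hit_y // cell_size)
        # Ignore readings that land outside the mapped area.
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
            grid[row][col] = 1
    return grid


grid = [[0] * 5 for _ in range(5)]
# Robot at the centre of the grid, facing +x, sees one obstacle ahead and one to its left.
mark_hits(grid, (2.5, 2.5, 0.0), [(0.0, 2.0), (math.pi / 2, 1.0)])
for row in grid:
    print(row)
```

Note that the result is only as good as the pose passed in, which is exactly why mapping and localization need each other.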

Why use SLAM

Let’s dive deeper into why SLAM is useful for autonomous robots by using the vacuum cleaner example. Imagine a typical living room with large furniture like a couch that mostly stays in place, smaller items like a coffee table that could move around the room frequently, and walls that never move (unless you’re in the middle of a big remodel!). To clean the room, the autonomous vacuum cleaner would need a map to navigate itself through the space.

The map can be fed to the vacuum in different ways. One way is to manually create and upload the map to the machine, requiring you to redraw the map whenever a piece of furniture is moved. Another method is redrawing the map from scratch every time the vacuum is turned on, which would be very resource-heavy and inefficient for a remote household device. This is when SLAM shines.

By utilizing SLAM, the vacuum cleaner is able to update just the places that have changed from the previous scan. As a result, it can carry out the main job of sweeping the floor more efficiently. In this example, the robot could rely on the walls for a consistent reference, but when a piece of furniture is in an unexpected location, the robot will update the map. A similar benefit applies to other autonomous robots, like the last-mile delivery robots. The general map of the neighborhood can be provided to the delivery robots, but the robots can respond to any new objects like parked cars or pedestrians that they see with SLAM.
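To make "update just the places that have changed" concrete, here is a deliberately simplified sketch. It assumes the map is a small occupancy grid and that the new scan is already aligned with it; `update_changed_cells` is a hypothetical helper. Real SLAM systems typically update cells probabilistically rather than overwriting them outright.

```python
def update_changed_cells(stored_map, new_scan):
    """Overwrite only cells whose observed state differs; return their coordinates.

    Cells set to None in new_scan were not observed and are left untouched.
    """
    changed = []
    for r, row in enumerate(new_scan):
        for c, observed in enumerate(row):
            if observed is not None and stored_map[r][c] != observed:
                stored_map[r][c] = observed
                changed.append((r, c))
    return changed


# 1 = occupied, 0 = free. The wall (top row) stays put; the coffee table moved.
stored = [[1, 1, 1],
          [0, 1, 0],
          [0, 0, 0]]
scan = [[1, 1, 1],
        [0, 0, None],
        [0, 1, 0]]
changed = update_changed_cells(stored, scan)
print(changed)
```

Only the two cells affected by the moved table are touched; the wall cells act as the stable reference.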

SLAM can also be used when it’s impossible to get a map to begin with – for example, a mineshaft, volcanic tunnel, or Mars! An autonomous robot is able to examine such unknown conditions, create an accurate map, and share the results with controllers for further research or explorations.

How to achieve SLAM

As briefly described, localization and mapping are tied very closely and work better when used together. In fact, this cooperation is possible because they both utilize features extracted from the surrounding environment. But what is a feature? Earlier, we used a corner as an example of a feature; a flat plane, such as a wall, is another. At a high level, these are features because they are distinct visual information that can be pointed out on a map. However, extracting a feature is quite different between a camera-based method and a lidar-based method.

Camera-based SLAM

When a camera is used to capture a scene, it is able to record high-resolution images with rich details. A computer vision algorithm, such as SIFT (scale-invariant feature transform) or ORB (Oriented FAST and Rotated BRIEF), can be used to detect features. For example, if a robot is using a camera to map a warehouse, the recorded pictures might have pallets, shelves, and doors. By examining the differences in the color of the neighboring pixels, the robot can identify that there are different objects in the scene.
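The idea of comparing neighboring pixels can be sketched in a few lines. This is not SIFT or ORB, which are considerably more sophisticated; it is only a toy illustration of the underlying intuition, with a hypothetical function name and a made-up 4x4 grayscale image.

```python
def strong_gradient_pixels(image, threshold):
    """Return (row, col) of pixels whose right or lower neighbour differs by more than threshold."""
    found = []
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            dx = abs(image[r][c + 1] - image[r][c]) if c + 1 < cols else 0
            dy = abs(image[r + 1][c] - image[r][c]) if r + 1 < rows else 0
            if max(dx, dy) > threshold:
                found.append((r, c))
    return found


# Tiny grayscale image: a dark floor (10) with a bright pallet (200) in the lower right.
image = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
boundary = strong_gradient_pixels(image, threshold=50)
print(boundary)
```

The returned pixels trace the border between the floor and the pallet, which is the kind of contrast a feature detector builds on.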

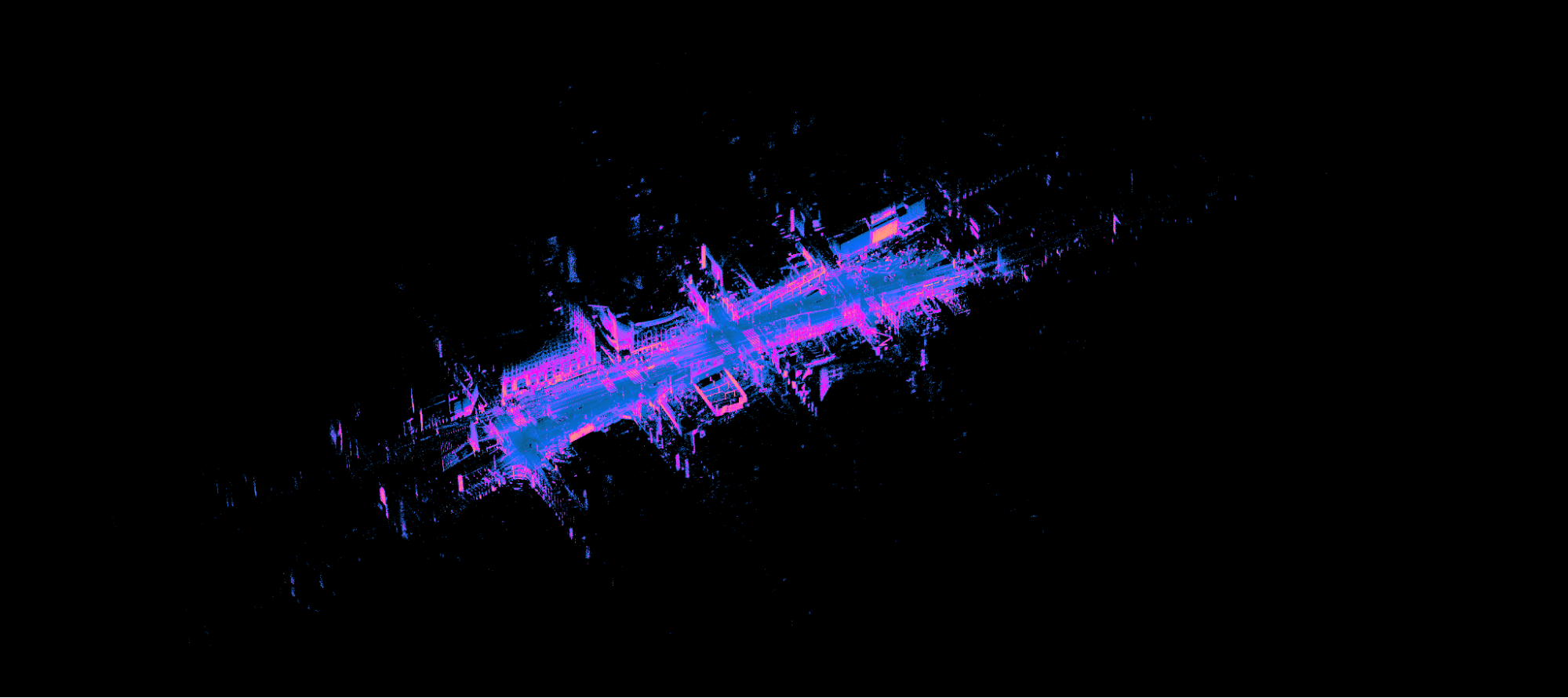

Lidar-based SLAM

Source: Robust Place Recognition using an Imaging Lidar by Shan, et al.)

Unlike camera-based SLAM, lidar-based SLAM relies on a sensor that natively collects information about the depth and geometry of the objects in the scene. If the same warehouse robot above were using a lidar sensor instead of a camera, it would use the distances and shapes returned to the sensor to identify objects. In fact, lidar-based SLAM uses edges and planes recorded through the device as features, instead of differences between neighboring pixels, to connect the visual information and create a map. Because the geometry is measured directly, this approach is far better suited than a camera to creating a realistic 3D digital twin of the environment.
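One simple way to separate edges from planes is to look at how sharply the surface bends around each point. The sketch below uses a basic local-curvature heuristic on a 2D scan line; the function name, the neighbourhood size `k`, and the threshold are all assumptions for illustration, and production pipelines work on 3D points with more careful filtering.

```python
import math


def classify_scan_points(points, k=2, edge_threshold=2.0):
    """Label each interior point of an ordered scan line as 'edge' or 'plane'.

    points: list of (x, y) in scan order. The score is the length of the summed
    offsets to the k neighbours on each side: near zero on a flat surface,
    large where the surface bends sharply.
    """
    labels = {}
    for i in range(k, len(points) - k):
        px, py = points[i]
        sx = sum(points[j][0] - px for j in range(i - k, i + k + 1))
        sy = sum(points[j][1] - py for j in range(i - k, i + k + 1))
        score = math.hypot(sx, sy)
        labels[i] = "edge" if score > edge_threshold else "plane"
    return labels


# A wall running along x that turns 90 degrees at (4, 0) and continues along y.
scan = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (4, 1), (4, 2), (4, 3), (4, 4)]
labels = classify_scan_points(scan)
print(labels)
```

With these settings, only the point at the corner is labelled an edge, while the points along either wall are labelled as planes.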

How to choose the right SLAM

There are various SLAM methods that function quite differently. Moreover, each method has advantages and disadvantages depending on the use cases. Let’s look at some distinctions that would help in deciding on a SLAM algorithm.

One category is the output of the SLAM algorithm. For example, if you are at a park, would you want to know the relative position of trees and benches (topological method) or the exact distances between them (metric method)? Furthermore, do you plan to recreate a digital copy of the park (volumetric method) or capture just enough information to distinguish objects (feature-based method)? As you can imagine, some outputs would require heavy resources and even different types of sensors to carry out the job.
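The difference between a metric and a topological output shows up directly in the data structure. The park landmarks, coordinates, and function names below are made up for illustration.

```python
import math

# Metric map: every landmark has coordinates (metres), so exact distances are available.
metric_map = {"tree": (0.0, 0.0), "bench": (3.0, 4.0), "fountain": (3.0, 10.0)}

# Topological map: only which places connect to which, with no coordinates.
topological_map = {
    "tree": ["bench"],
    "bench": ["tree", "fountain"],
    "fountain": ["bench"],
}


def metric_distance(a, b):
    """Straight-line distance between two landmarks in the metric map."""
    (ax, ay), (bx, by) = metric_map[a], metric_map[b]
    return math.hypot(bx - ax, by - ay)


def hops(start, goal):
    """Breadth-first search: number of connections to traverse from start to goal."""
    frontier, seen, steps = [start], {start}, 0
    while frontier:
        if goal in frontier:
            return steps
        frontier = [n for node in frontier for n in topological_map[node] if n not in seen]
        seen.update(frontier)
        steps += 1
    return None  # goal is not reachable


print(metric_distance("tree", "bench"))
print(hops("tree", "fountain"))
```

The metric map can answer "how far?", while the topological map can only answer "how do I get there?", but it is much lighter to build and store.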

Another factor is the nature of the environment. Is the place you are running a SLAM algorithm a static place that will not change over time or a dynamic place that would require updates? Imagine a robot strolling down an empty aisle at a warehouse but finding stacks of pallets on the way back. Would it be able to close the loop and make the connection that it is in the same place but with just different objects added?

Last but not least is the multi-robot case. When multiple robots navigate autonomously inside a warehouse, they face additional challenges. One challenge is creating a clean map that does not include the other robots. How do you make sure that each robot knows the others’ positions so that it can exclude them from the map? If robots can communicate with each other, can each robot create smaller maps locally and share them with a central system to create a whole map?
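As a rough sketch of the last idea, the snippet below merges small local occupancy grids into one global grid. It assumes the hard part is already solved, namely that each local map's position in the global frame is known; `merge_local_maps` and the offsets are hypothetical.

```python
def merge_local_maps(local_maps, rows, cols):
    """Combine local grids into one global grid of size rows x cols.

    local_maps: list of (row_offset, col_offset, grid), where the offsets place
    the local grid's top-left cell in the global grid.
    A global cell is occupied (1) if any robot saw it occupied.
    """
    merged = [[0] * cols for _ in range(rows)]
    for row_off, col_off, grid in local_maps:
        for r, row in enumerate(grid):
            for c, value in enumerate(row):
                merged[row_off + r][col_off + c] |= value
    return merged


robot_a = (0, 0, [[1, 0],
                  [0, 0]])
robot_b = (1, 1, [[0, 1],
                  [1, 1]])
global_map = merge_local_maps([robot_a, robot_b], rows=3, cols=3)
for row in global_map:
    print(row)
```

In practice, estimating how the local maps line up is itself a SLAM problem, which is part of what makes the multi-robot case challenging.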

As you can see, there is no single SLAM algorithm that caters to all use cases. However, SLAM is still evolving with advancements in technology. To be successful, one needs to truly understand the use case and be flexible in testing different algorithms to find the best solution.

What comes after SLAM

In the beginning, we used the example of waking up in a foreign place to explain that SLAM is the act of drawing a map of the surroundings and locating where you are on the map. However, this excludes the “what” on the map. We know a tree when we see one, but robots don’t. We need to let them know that the tall object with scatters of dots around the center in front of them is in fact a tree. This addition of semantics to the SLAM output can increase the level of autonomy a robot can accomplish. For example, a robot can maneuver around the warehouse more safely if it can identify humans and slow down near them to prevent accidents from sudden movements.

With the developments in machine learning, adding semantics to SLAM is becoming more accessible, further expanding the use cases for AGVs and AMRs. There are autonomous forklifts that can identify a pallet and its holes to be able to transport one, and autonomous excavators that can move to specific locations to dig the soil.

Conclusion

We went over what Simultaneous Localization and Mapping is, the different algorithms to achieve SLAM, and the addition of semantics that enhances the level of autonomy. As you’ve seen, SLAM isn’t an easy concept to understand, nor is it easy to apply to a use case, even for an experienced practitioner. There are numerous considerations to carefully examine before implementing one.

The field of SLAM is continuously evolving and more optimized methods are being introduced to enable SLAM in different applications. Here are some additional resources you might find interesting:

- Upcoming webinar: Diving Deeper into Lidar SLAM

- Previous webinar: Zero to SLAM in 30 minutes

- Blog post: Lidar Mapping with Ouster 3D Sensors

If you are interested in learning more about how SLAM might be used in your situation, reach out to us, and we’d be happy to assist!