With new firmware updates, the OS1 blurs the line between lidar and camera. Take it for a spin.

It was clear when we started developing the OS1 three years ago that deep learning research for cameras was outpacing lidar research. Lidar data has incredible benefits – rich spatial information and lighting-agnostic sensing, to name a couple – but it lacks the raw resolution and efficient array structure of camera images, and 3D point clouds are still more difficult to encode in a neural net or process with hardware acceleration.

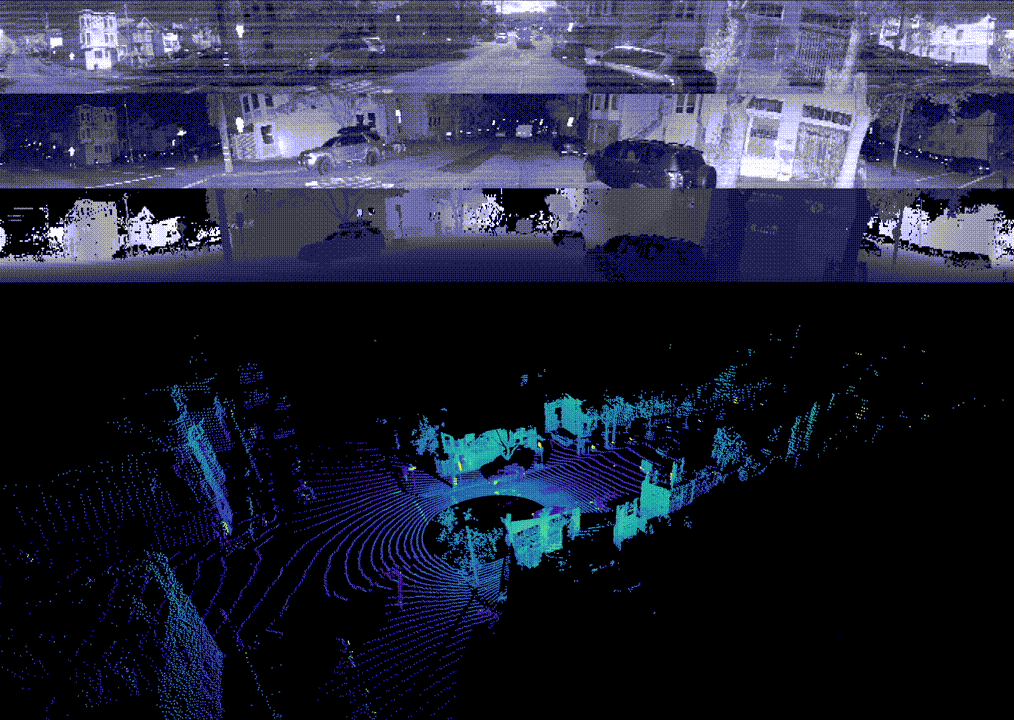

With the tradeoffs between both sensing modalities in mind, we set out to bring the best aspects of lidars and cameras together in a single device from the very beginning. Today we’re releasing a firmware upgrade and update to our open source driver that deliver on that goal. The OS1 now outputs fixed resolution depth images, signal images, and ambient images in real time, all without a camera. The data layers are perfectly spatially correlated, with zero temporal mismatch or shutter effects, and have 16 bits per pixel and linear photo response. Check it out:

The OS1’s optical system has a larger aperture than most DSLRs, and the photon counting ASIC we developed has extreme low light sensitivity, so we’re able to collect ambient imagery even in low light conditions. The OS1 captures both the signal and ambient data in the near infrared, so the data closely resembles visible light images of the same scenes. That gives the data a natural look and a high chance that algorithms developed for cameras will translate well to it. In the future we’ll work to remove fixed pattern noise from these ambient images, but we wanted to let customers get their hands on the data in the meantime!

We’ve also updated our open source driver to output these data layers as fixed resolution 360° panoramic frames so customers can begin using the new features immediately. We’re also providing a new cross-platform visualization tool built on VTK for viewing, recording, and playing back the imagery and the point clouds side by side on Linux, Mac, and Windows. The data output from the sensor requires no post processing to achieve this functionality: the magic is in the hardware, and the driver simply assembles streaming data packets into image frames.
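To picture what "assembling streaming data packets into image frames" means, here is a minimal sketch. It is not the actual driver code or packet format: the frame size (64 beams by 1024 columns), the `new_frame` and `add_column` helpers, and the synthetic values are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical frame size: 64 beams (rows) by 1024 azimuth columns per revolution.
N_BEAMS = 64
N_COLUMNS = 1024
LAYERS = ("depth", "signal", "ambient")

def new_frame():
    """Allocate one empty 16-bit image per data layer."""
    return {name: np.zeros((N_BEAMS, N_COLUMNS), dtype=np.uint16) for name in LAYERS}

def add_column(frame, column_index, depth, signal, ambient):
    """Copy one azimuth column of per-beam measurements into each layer."""
    frame["depth"][:, column_index] = depth
    frame["signal"][:, column_index] = signal
    frame["ambient"][:, column_index] = ambient

# Simulate one revolution of streamed columns using synthetic placeholder values.
frame = new_frame()
for col in range(N_COLUMNS):
    depth = np.full(N_BEAMS, col, dtype=np.uint16)
    signal = np.arange(N_BEAMS, dtype=np.uint16)
    ambient = np.full(N_BEAMS, 7, dtype=np.uint16)
    add_column(frame, col, depth, signal, ambient)

print({name: image.shape for name, image in frame.items()})
```

Because every layer is filled from the same column of the same measurement, pixel (row, column) refers to the same point in the scene in all three images.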

Customers who have been given early access to the update have been blown away, and we encourage anyone curious about the OS1 to watch our unedited videos online, or to download our raw data and play it back with the visualizer.

Firmware update page: https://www.ouster.com/downloads

GitHub & sample data: https://github.com/ouster-LIDAR

This Is Not a Gimmick.

We’ve seen multiple lidar companies market a lidar/camera fusion solution by co-mounting a separate camera with a lidar, performing a shoddy extrinsic calibration, and putting out a press release for what ultimately is a useless product. We didn’t do that, and to prove it we want to share some examples of how powerful the OS1 sensor data can be, which brings us back to deep learning.

Because the sensor outputs fixed resolution image frames with depth, signal, and ambient data at each pixel, we’re able to feed these images directly into deep learning algorithms that were originally developed for cameras. We encode the depth, signal (intensity), and ambient information in a vector, much like a network for color images would encode the red, green, and blue channels at the input layer. The networks we’ve trained have generalized extremely well to the new lidar data types.
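As a rough illustration of that channel encoding, the sketch below stacks three 16-bit layers into a single three-channel array, the same shape a camera network would expect for RGB. The `stack_layers` helper, the 64 by 1024 image size, and the simple division by 65535 are assumptions for this example; the normalization we actually use in training is not described here.

```python
import numpy as np

def stack_layers(depth, signal, ambient):
    """Stack three 16-bit layers into one (H, W, 3) float32 array scaled to [0, 1]."""
    stacked = np.stack([depth, signal, ambient], axis=-1).astype(np.float32)
    # Assumed normalization: divide by the 16-bit maximum.
    return stacked / np.float32(65535.0)

# Synthetic layers standing in for real sensor output.
depth = np.full((64, 1024), 65535, dtype=np.uint16)
signal = np.zeros((64, 1024), dtype=np.uint16)
ambient = np.full((64, 1024), 13107, dtype=np.uint16)

network_input = stack_layers(depth, signal, ambient)
print(network_input.shape, network_input.dtype)
```

The last axis plays the role that the red, green, and blue channels play for a color image.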

As one example, we trained a per pixel semantic classifier to identify drivable road, vehicles, pedestrians, and bicyclists on a series of depth and intensity frames from around San Francisco. We’re able to run the resulting network on an NVIDIA GTX 1060 in real time, and we’ve achieved encouraging results, especially considering this is the first implementation we’ve attempted. Take a look:

Because all data is provided per pixel, we’re able to seamlessly translate the 2D masks into the 3D frame for additional real time processing like bounding box estimation and tracking.
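The mapping from a 2D mask to 3D points can be sketched as follows. This uses an idealized spherical model in which each row has one elevation angle and each column has one azimuth angle; it ignores the sensor’s real beam calibration, and the `mask_to_points` helper and the angle arrays are hypothetical.

```python
import numpy as np

def mask_to_points(range_m, mask, azimuth_rad, elevation_rad):
    """Return the (N, 3) XYZ points for the pixels where mask is True.

    range_m:       (H, W) range per pixel, in meters
    mask:          (H, W) boolean mask, e.g. one class from a segmentation network
    azimuth_rad:   (W,) azimuth angle of each column
    elevation_rad: (H,) elevation angle of each row
    """
    az = azimuth_rad[np.newaxis, :]    # broadcast across rows
    el = elevation_rad[:, np.newaxis]  # broadcast across columns
    x = range_m * np.cos(el) * np.cos(az)
    y = range_m * np.cos(el) * np.sin(az)
    z = range_m * np.sin(el)
    xyz = np.stack([x, y, z], axis=-1)  # (H, W, 3)
    return xyz[mask]                    # row-major order over True pixels

# Tiny 2 x 2 example with synthetic values.
range_m = np.array([[10.0, 5.0], [2.0, 4.0]])
azimuth_rad = np.array([0.0, np.pi / 2])
elevation_rad = np.array([0.0, np.pi / 6])
mask = np.array([[True, False], [False, True]])

points = mask_to_points(range_m, mask, azimuth_rad, elevation_rad)
print(points.round(3))
```

No search or association step is needed: the mask indexes the point array directly because both share the same pixel grid.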

In other cases, we’ve elected to keep the depth, signal, and ambient images separate and pass them through the same network independently. As an example, we took the pre-trained network from DeTone et al.’s SuperPoint project [link] and ran it directly on our intensity and depth images. The network was trained on a large number of generic RGB images and has never seen depth/lidar data, but the results for both the intensity and depth images are stunning:
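Running one network over each layer independently amounts to the pattern sketched below. Everything here is illustrative: `to_unit_range` is an assumed per-layer rescaling, and `brightest_pixel` is a trivial stand-in for a real keypoint network such as SuperPoint, not its actual interface.

```python
import numpy as np

def to_unit_range(layer):
    """Rescale one layer to float32 in [0, 1] using its own min and max."""
    layer = layer.astype(np.float32)
    lo, hi = layer.min(), layer.max()
    if hi == lo:
        return np.zeros_like(layer)
    return (layer - lo) / (hi - lo)

def run_per_layer(layers, detector):
    """Apply the same detector to each named layer independently."""
    return {name: detector(to_unit_range(image)) for name, image in layers.items()}

def brightest_pixel(image):
    """Stand-in for a keypoint network: return (row, col) of the maximum value."""
    return tuple(int(i) for i in np.unravel_index(np.argmax(image), image.shape))

# Synthetic layers with one bright pixel each, at different locations.
depth = np.zeros((4, 6), dtype=np.uint16)
depth[1, 2] = 500
signal = np.zeros((4, 6), dtype=np.uint16)
signal[3, 5] = 900

results = run_per_layer({"depth": depth, "signal": signal}, brightest_pixel)
print(results)
```

Each layer yields its own set of detections, which can then be compared or combined downstream.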

On careful inspection, it’s clear that the network is picking up different key points in each image. Anyone who has worked on lidar and visual odometry will grasp the value of the redundancy embodied in this result. Lidar odometry struggles in geometrically uniform environments like tunnels and highways, while visual odometry struggles in textureless and poorly lit environments. The OS1’s camera/lidar fusion provides a multi-modal solution to this long-standing problem.

It’s results like these that make us confident that well-fused lidar and camera data are much more than the sum of their parts, and we expect further convergence between lidars and cameras in the future.

Look out for more exciting developments from Ouster in the future!

Links

1. Videos:

Sunny drive with ambient data:

- Forward View: https://www.youtube.com/watch?v=9qYwROaCxF4

- Oblique View: https://www.youtube.com/watch?v=LcnbOCBMiQM

Pixelwise semantic segmentation: https://www.youtube.com/watch?v=JxR9MasA9Yc

SuperPoint: https://www.youtube.com/watch?v=igsJxrbaejw

2. Firmware update page: https://ouster.com/downloads

3. GitHub & sample data: www.github.com/ouster-LIDAR