Mobility industry experts often discuss the enormous possibilities and daunting challenges of building an autonomous vehicle company, but they have spent relatively little time discussing what the architecture of an autonomous vehicle (AV) actually looks like. Because we at Ouster spend our days building sensors that play an important role in the AV sensor and compute stack, we wanted to share a quick overview of the current state of autonomous vehicle architecture. For a deeper dive, check out our case study with Teraki.

First, a bit of background for those new to autonomous vehicles.

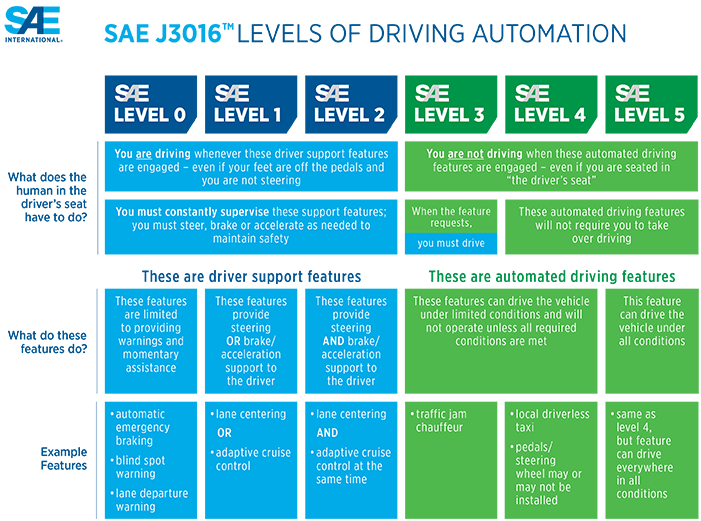

The Society of Automotive Engineers (SAE) lays out a five-step path to fully autonomous vehicles, from level 0 (no automation) to level 5 (full automation).

Today, commercially deployed vehicles achieve level 2 automation (sometimes now called “2+”) and rely on a sensor suite of cameras, a front-facing radar, and low-resolution lidar. Behind this simple sensor suite is a very basic processing stack.

To advance towards SAE level 4 and 5, the industry needs to solve a few key technical hurdles:

- Accurate and robust perception and localization in all environments

- Faster real-time decision making in diverse conditions

- Reliable and cost-effective systems that can be commercialized in production volumes

Overcoming these problems requires a new hardware stack with a more powerful set of sensors and faster computing infrastructure that is also commercially viable in both cost and scalability.

Hardware: the sensors

Lidar

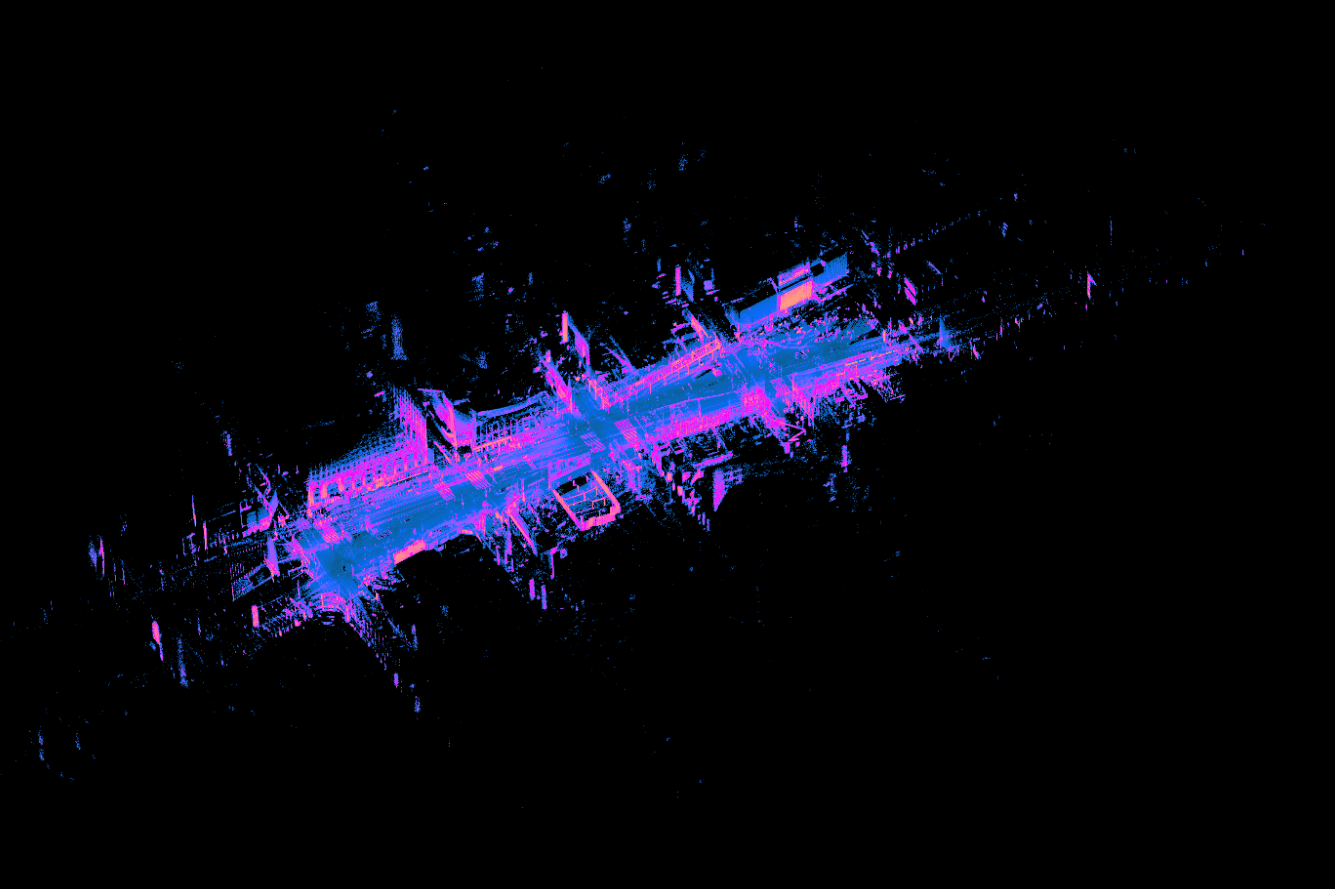

Lidar is used on all level 4 or level 5 prototype vehicles due to its ability to provide accurate depth and spatial information about the environment. This data, represented in a 3D point cloud, augments camera and radar data to more quickly and accurately classify objects around the vehicle.

Pros: Highly accurate and precise depth (range) information, works day or night, agnostic to lighting and most external conditions, mid-level resolution (much higher than radar, lower than camera)

Cons: High data rate, higher cost today, larger form factor

Integration:

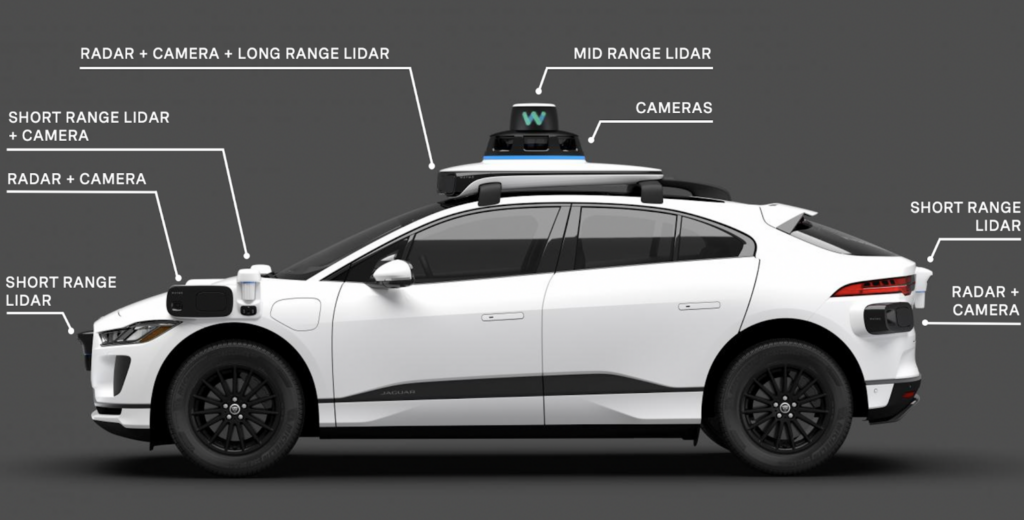

- 4 short-range lidar sensors around the edges of the vehicle, used for identifying potential risks immediately around the vehicle (e.g. small animals, cones, curbs) that may even be missed by human drivers. This setup would include one sensor on the grille of the vehicle, two sensors near the side-view mirrors, and one sensor on the tailgate of the vehicle.

- 2 mid-range lidar sensors placed at an angle on the edges of the roof of the vehicle, used for mapping and localization.

- 2 long-range lidar sensors placed at the top of the vehicle, used for detecting dark objects and potential obstacles in front of the vehicle when it is traveling at high speeds. There are usually two of these sensors for redundancy, and they can be either 360º or forward-facing sensors.

Camera

Cameras provide the traditional core of an autonomous vehicle perception stack. Level 4 or 5 vehicles have 20 or more cameras placed around the vehicle, which are aligned and calibrated to create an extremely high-definition 360º view of the environment.

Pros: High resolution and full color in a 2D array, inexpensive, easy to integrate (can be nearly hidden in the car), sees the world in the same way humans do

Cons: Dependent on light from external sources and sensitive to variable light conditions, susceptible to adverse weather conditions, 360º view requires computationally intensive work to stitch images

Radar

Over the past 15 years, radar has become commonplace in automotive applications. Automotive radar typically transmits radio waves as a frequency-modulated continuous wave (FMCW) and uses the reflections to build a 3D point cloud of the environment.
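To make the frequency-modulated continuous wave idea concrete, here is a minimal sketch of the textbook range relationship for a linear chirp: the echo returns delayed, and the frequency difference between the transmitted and received signal (the beat frequency) is proportional to range. The function names and the chirp parameters are our own illustrative assumptions, not values from any particular radar.

```python
# Speed of light in vacuum, in metres per second.
C = 299_792_458.0


def fmcw_range_m(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Range of a stationary target from the beat frequency of a linear chirp."""
    slope = bandwidth_hz / chirp_s      # chirp slope in Hz per second
    round_trip_s = beat_hz / slope      # echo delay that produces this beat
    return C * round_trip_s / 2.0       # halve it: the wave travels out and back


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest range separation at which two targets can be told apart."""
    return C / (2.0 * bandwidth_hz)


# Hypothetical chirp: a 1 GHz sweep over 50 microseconds, 10 MHz beat frequency.
print(round(fmcw_range_m(beat_hz=10e6, bandwidth_hz=1e9, chirp_s=50e-6), 2), "m")
print(round(range_resolution_m(1e9), 3), "m")
```

The sketch ignores Doppler shift from moving targets, which a real radar has to separate from the range-induced beat frequency.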

Pros: Depth information of objects and environment, inexpensive, robust, immune to adverse weather conditions (rain, snow), long range

Cons: Low resolution, false negatives with stationary objects and critical obstacles

Integration:

- For level 4 and level 5 autonomous vehicles, radar is placed 360º around the vehicle and serves as a reliable redundant unit for object detection

- Up to about 10 radars, placed on the side-view mirrors, in the front grille, on the back bumper, or at the corners of the vehicle.

All three of the sensors above have their pros and cons, but in combination they create a highly robust sensor suite that enables level 4 and level 5 autonomous driving.
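As a rough illustration, the combined suite described above can be written down as a small data structure. The class and field names are hypothetical, and the counts simply take the upper figures quoted in this post; real vehicles vary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorGroup:
    kind: str    # "lidar", "camera", or "radar"
    role: str    # what the group is used for
    count: int   # number of units in the group


# Counts follow the upper figures quoted in the text (illustrative only).
SENSOR_SUITE = [
    SensorGroup("lidar", "short-range, near-field hazards", 4),
    SensorGroup("lidar", "mid-range, mapping and localization", 2),
    SensorGroup("lidar", "long-range, high-speed obstacle detection", 2),
    SensorGroup("camera", "360-degree high-definition view", 20),
    SensorGroup("radar", "redundant 360-degree object detection", 10),
]


def units_by_kind(suite):
    """Total number of sensor units per sensor type."""
    totals = {}
    for group in suite:
        totals[group.kind] = totals.get(group.kind, 0) + group.count
    return totals


print(units_by_kind(SENSOR_SUITE))
```

Even this simplified layout adds up to dozens of independent data streams, which is what drives the processing requirements.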

Given the number of sensors being deployed on these vehicles, the processing requirements are tremendous. Next, let’s look at different ways to tackle this challenge.

Hardware: the processors

There are two main approaches for handling the tremendous amount of data generated by the sensor suite: centralized processing or distributed (edge) processing.

Centralized processing

With centralized processing, all raw data from the sensors is sent to and processed in a single central processing unit.

Pros: Sensor side is small, low cost, and low power

Cons: Need for expensive chipsets with high processing power and speed, potentially high application latency, adding more sensors increases processing demands (which can quickly become a bottleneck)

Distributed (edge) processing

With distributed processing, each sensor module includes an application processor that handles much of the data processing, so local decisions can be made at the edge. Only the relevant information from each sensor is sent to a central unit, where it is compiled and used for analysis or decision making.

Pros: Lower bandwidth, cheaper interface between the sensor modules and central processing unit, lower application latency, lower processing power, adding more sensors will not drastically increase performance needs

Cons: Higher functional safety requirements

A distributed processing model is becoming the preferred way to process the growing number of data-intensive sensor signals simultaneously and in real time.
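The bandwidth argument can be sketched with a few lines of arithmetic: under centralized processing every raw stream reaches the central unit, while under edge processing each sensor forwards only a fraction of its data. Every number below (per-sensor data rates and the fraction kept after edge processing) is a hypothetical placeholder chosen for illustration, not a measurement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorStream:
    name: str
    count: int            # number of units of this sensor type
    raw_mbps: float       # raw output of one unit (assumed)
    kept_fraction: float  # share of data forwarded after edge processing (assumed)


def central_link_mbps(streams, edge: bool) -> float:
    """Total data rate arriving at the central unit."""
    total = 0.0
    for s in streams:
        per_unit = s.raw_mbps * (s.kept_fraction if edge else 1.0)
        total += s.count * per_unit
    return total


# Hypothetical suite; none of these rates or fractions come from a datasheet.
streams = [
    SensorStream("lidar", count=8, raw_mbps=250.0, kept_fraction=0.10),
    SensorStream("camera", count=20, raw_mbps=500.0, kept_fraction=0.05),
    SensorStream("radar", count=10, raw_mbps=10.0, kept_fraction=0.50),
]

print("centralized:", central_link_mbps(streams, edge=False), "Mbps")
print("distributed:", central_link_mbps(streams, edge=True), "Mbps")
```

The point of the sketch is the scaling behavior: adding a sensor to the centralized design adds its full raw rate to the central link, while in the distributed design it adds only the fraction that survives edge processing.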

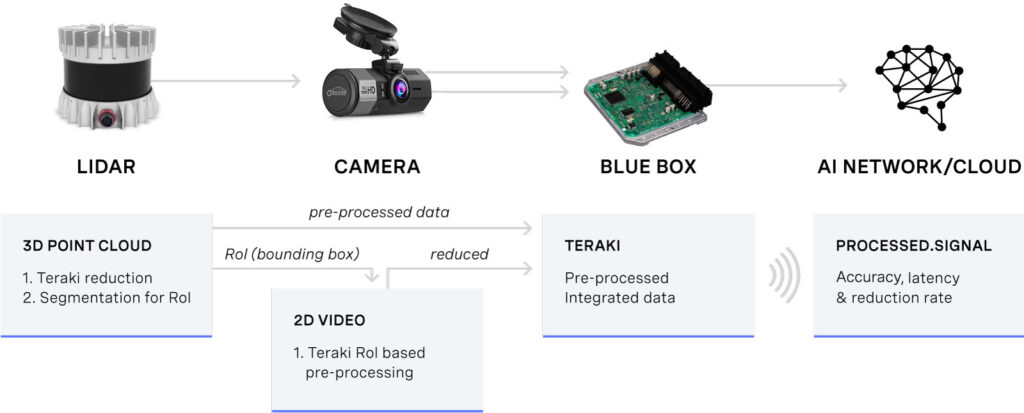

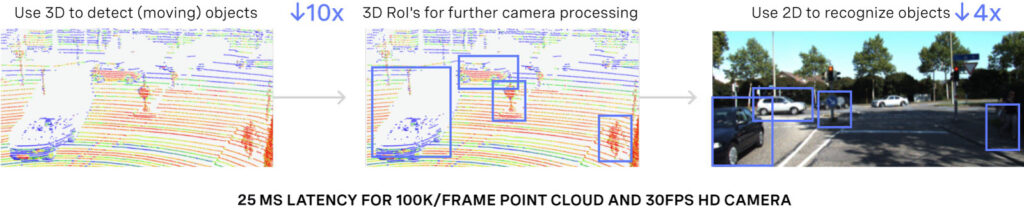

To illustrate the advantages of this approach, we used software from Teraki to fuse an Ouster lidar sensor and an HD camera.

Our solution: Teraki software with Ouster lidar

Ouster lidar generates high-resolution point cloud data that maps the environment around the sensor. The high-density information produced by the lidar sensor (as high as 250 Mbps for 128-channel sensors) makes rapid processing challenging for the car’s hardware. Combining this with the complex calculations needed for video processing to make accurate decisions with near real-time latency is a daunting challenge for autonomous driving use cases.
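A back-of-the-envelope calculation shows how a figure of this size can arise. The sketch below assumes a scan of 2048 columns per rotation at 10 rotations per second and 96 bits per point; all three values are illustrative assumptions for the arithmetic, not specifications quoted in this post.

```python
def points_per_second(channels: int, columns_per_rev: int, revs_per_second: int) -> int:
    """Number of range measurements a spinning lidar produces each second."""
    return channels * columns_per_rev * revs_per_second


def data_rate_mbps(points_per_s: int, bits_per_point: int) -> float:
    """Payload data rate in megabits per second (1 Mbps = 1e6 bits/s)."""
    return points_per_s * bits_per_point / 1e6


# 128 channels from the text; scan pattern and bits per point are assumed.
pps = points_per_second(channels=128, columns_per_rev=2048, revs_per_second=10)
print(pps, "points per second")
print(round(data_rate_mbps(pps, bits_per_point=96), 1), "Mbps")
```

Under these assumptions a single 128-channel sensor produces a few million points per second and a data rate on the order of 250 Mbps, before any camera data is added.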

Teraki’s embedded edge compute sensor fusion product helps to solve this challenge with embedded, intelligent selection of sensor information. The software detects and recognizes the regions of interest from Ouster’s 3D point cloud, compresses them, and passes only the relevant information to the central computing unit. The detected region of interest is passed to the camera and overlaid onto the video data.
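The idea of forwarding only regions of interest can be illustrated with a deliberately simple sketch. This is not Teraki's algorithm: the detection step is skipped entirely, the regions are given as hand-written axis-aligned boxes, and all names here are hypothetical. It only shows how selecting points inside a region shrinks what has to be sent to the central unit.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres, sensor frame


@dataclass(frozen=True)
class Box:
    """Axis-aligned region of interest, given by two opposite corners."""
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        return all(l <= v <= h for l, v, h in zip(self.lo, p, self.hi))


def select_roi(cloud: List[Point], rois: List[Box]) -> List[Point]:
    """Keep only the points that fall inside at least one region of interest."""
    return [p for p in cloud if any(r.contains(p) for r in rois)]


# Tiny hand-made point cloud and one hypothetical region of interest.
cloud = [(1.0, 0.0, 0.2), (5.0, 1.0, 0.5), (30.0, -2.0, 1.0), (5.5, 0.8, 0.4)]
rois = [Box(lo=(4.0, 0.0, 0.0), hi=(6.0, 2.0, 2.0))]

kept = select_roi(cloud, rois)
print(len(kept), "of", len(cloud), "points forwarded")
```

In a real pipeline the regions would come from a detection step running on the sensor module, and the selected points would also be compressed before transmission.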

Using this model for pre-processing on the edge results in improved mapping, localization and perception algorithms. This means safer vehicles that make better, near real-time decisions – a step towards level 4 and 5 autonomous vehicles.

This post is adapted from a case study that was written in collaboration with Teraki. Check out the case study with Teraki to learn more about how AV companies are architecting the next generation of vehicles. Visit www.teraki.com for more information on their edge computing solutions for autonomous vehicles.